AI is no longer a side project.

It is now inside clinical notes, triage flows, authorizations, and patient messages.

When AI shapes care or operations, it becomes auditable.

Leaders must be able to explain what happened, who owned it, and what controls were in place.

This post gives hospital CEOs, COOs, CIOs, and CTOs a clear control model for 2026, across Switzerland and the EU.

It is built for real hospital life, not for a slide deck.

A lot of hospitals think they are “piloting AI.”

But AI is already there.

It is often embedded in tools you already pay for. It appears as “smart” features, auto-summaries, decision support prompts, and automated next steps.

That creates a gap.

Teams use AI every day.

Leaders cannot always say where it runs, what data it uses, or what happens when it is wrong. That gap is where risk grows.

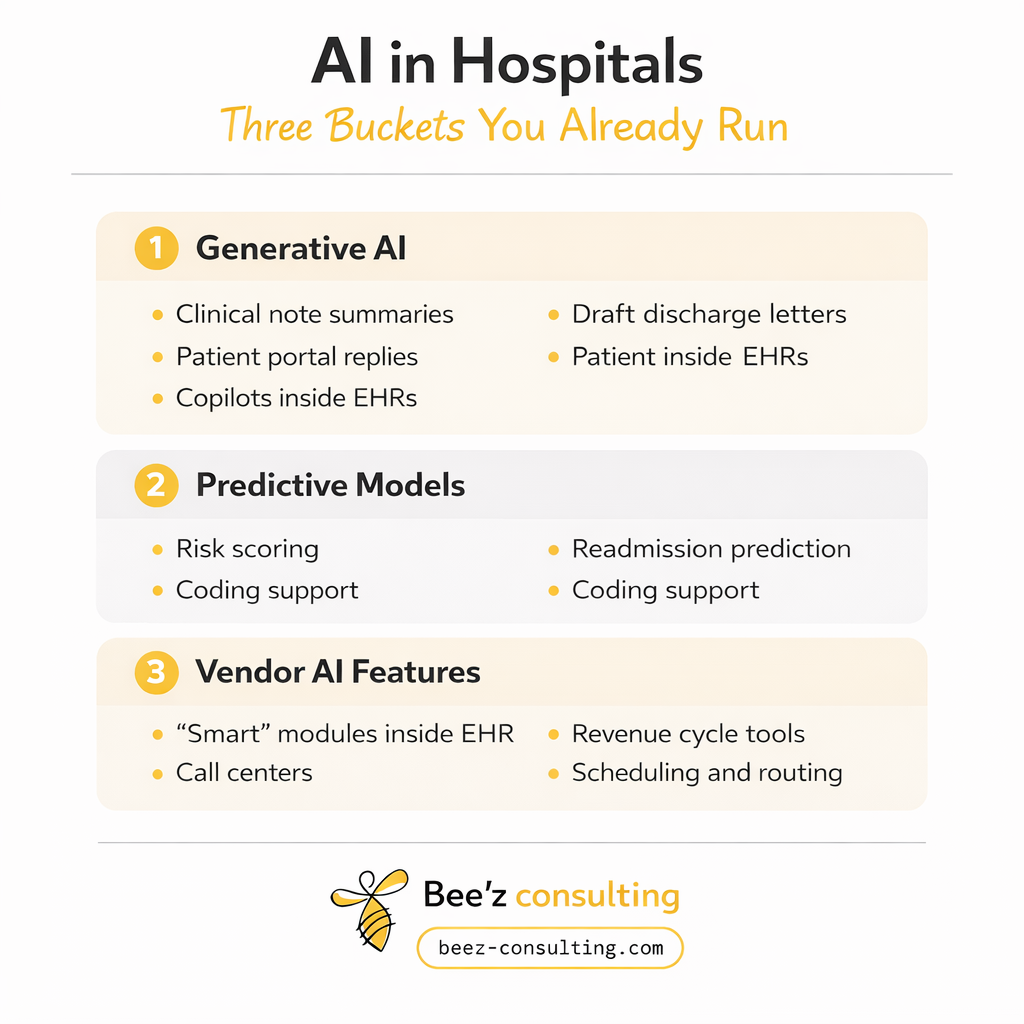

When this post says “AI,” it includes three buckets many hospitals already run.

If your team did not “buy an AI system,” but your vendor rolled out AI features, you still need governance.

BHM Healthcare Solutions is a US healthcare consulting firm that supports provider and payer organizations on strategy, operations, and performance improvement.

Their “2025 in Review” series shares what they are seeing across real-world AI deployments in healthcare.

BHM’s warning is simple: Leaders approved AI as a narrow productivity helper.

In practice, it became part of the system. It moved into documentation, utilization analysis, coverage logic, and admin workflows.

That creates new dependencies and new failure modes.

BHM points to patterns leaders are now seeing:

Leadership test for 2026: stop measuring “how much AI you deployed.”

Start measuring “how clearly you can explain and prove control.”

The EU AI Act lays down rules for AI systems, including obligations for certain higher-risk uses.

It includes requirements tied to governance, documentation, and human oversight.

You do not need to be a lawyer to get the point.

If an AI system can affect health, safety, rights, or access, you need to show control. You need clear roles, documented limits, monitoring, and the ability to investigate.

The European Commission states the EHDS Regulation entered into force on 26 March 2025, starting a transition phase toward staged application.

EHDS matters because AI scale depends on data.

If you cannot show where data came from, what permissions apply, and how it moves across systems, you will struggle to scale AI safely. You will also struggle to defend it when questioned.

On 12 February 2025, the Swiss Federal Council said Switzerland intends to ratify the Council of Europe AI Convention and adapt Swiss law as needed. It also stated that work will continue on AI regulation in specific sectors, including healthcare.

Switzerland may not launch one single “Swiss AI Act” right away.

Still, the direction is clear: international alignment, plus sector-led rules.

Switzerland’s revised data protection framework has applied since 1 September 2023.

That affects real deployments today:

Leader translation: if AI touches patient data, you need purpose limits, access controls, and privacy-by-design. You also need a transparency stance you can defend.

The Swiss Federal Office of Public Health notes Switzerland revised medical device rules in line with EU MDR and IVDR, raising safety and quality expectations.

In plain terms: if an AI tool functions as medical device software in your establishment, treat it like regulated tech.

That means risk management, validation, change control, and documentation you can show under review.

DigiSanté aims to introduce standards, specifications, and infrastructure to support seamless data exchange.

It also points toward a secure Swiss health data space and responsible secondary use of data.

Even outside the EU, EU rules can still matter in practice when you:

Also, the Swiss Federal Council signals an intent to align internationally. That increases the chance that “EU-grade” governance becomes the default expectation in partnerships and procurement.

Many Swiss providers adopt EU-style governance because vendors, partners, and patient flows pull them there.

Boards do not want buzzwords.

They want proof of control. Here are the questions that matter.

The EU AI Act includes obligations for AI uses that fall into higher-risk categories.

In healthcare, that can include tools that influence triage, decisions, or patient-facing outputs.

What the board expects: a clear list of these use cases, named owners, and the controls in place.

EHDS entered into force on 26 March 2025 and moves through a transition phase toward staged application.

The practical point is immediate: interoperability, permissions, and traceable provenance are needed for safe scale and for defensible use.

What the board expects: data sources mapped per use case, clear permissions, and traceable provenance.
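For teams that keep this mapping in a structured form, the check can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed format: the `DataSource` fields, the `mapping_gaps` function, and the example use case names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DataSource:
    """One data feed behind an AI use case (all field names are illustrative)."""
    system: str            # e.g. the clinical system the data comes from
    permission_basis: str  # documented purpose or consent reference; empty if unclear
    provenance_ref: str    # pointer to where the data lineage is documented


def mapping_gaps(use_cases: dict[str, list[DataSource]]) -> dict[str, list[str]]:
    """Return, per use case, the issues that would block a 'prove it' request."""
    problems: dict[str, list[str]] = {}
    for use_case, sources in use_cases.items():
        issues = []
        if not sources:
            issues.append("no data sources mapped")
        for source in sources:
            if not source.permission_basis.strip():
                issues.append(f"{source.system}: permission unclear")
            if not source.provenance_ref.strip():
                issues.append(f"{source.system}: provenance not traceable")
        if issues:
            problems[use_case] = issues
    return problems


# Hypothetical example data, for illustration only.
example = {
    "discharge-summary-drafts": [
        DataSource("EHR", permission_basis="treatment purpose", provenance_ref=""),
    ],
    "patient-message-replies": [],
}
for name, issues in mapping_gaps(example).items():
    print(name, "->", issues)
```

An empty result means every use case has at least one mapped source, each with a stated permission basis and a provenance reference. It says nothing about whether those entries are correct, which still needs human review.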

Swiss data protection has applied since 1 September 2023.

That shapes what you can do right now with patient data and AI-enabled workflows.

What the board expects: purpose limits, access controls, and a transparency stance that can be explained simply.

If an AI tool functions as medical device software in your setting, it needs stronger controls.

Validation, risk management, and change control are central.

What the board expects: clear classification thinking, and proof that higher assurance applies where needed.

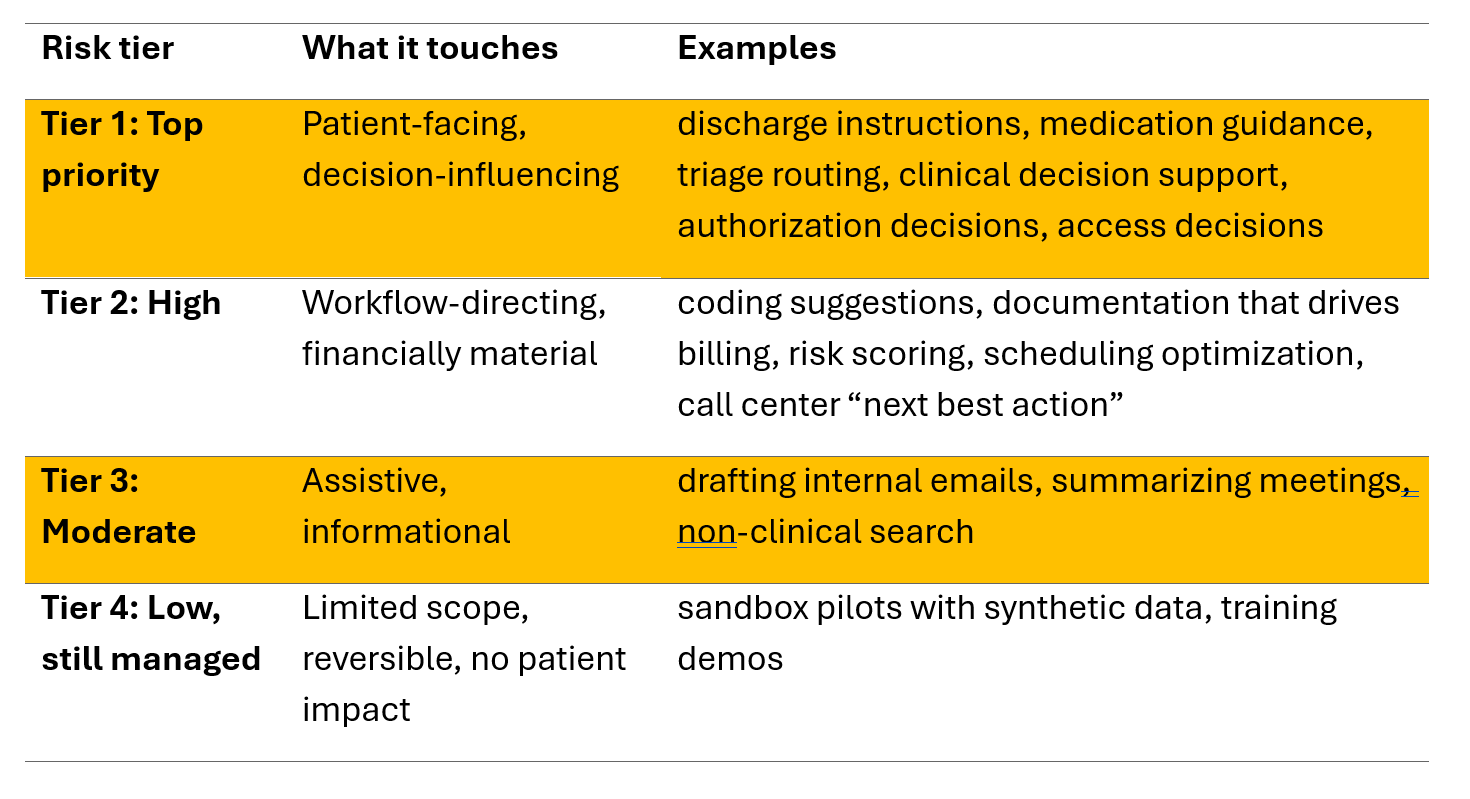

You do not need a complex framework to begin.

Start with four tiers. Use them to decide what to govern first.

Rule of thumb: Tier 1 and Tier 2 must have named owners, written rules of use, monitoring, and an evidence pack ready.
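The rule of thumb above can be expressed as a simple check against an AI register. The sketch below is one possible shape, under stated assumptions: tiers are numbered 1 to 4 with 1 as the highest risk, and `RegisterEntry`, `control_gaps`, and the example tools are hypothetical names, not part of any standard.

```python
from dataclasses import dataclass


@dataclass
class RegisterEntry:
    """One AI tool or feature in the register (illustrative fields only)."""
    name: str
    tier: int                   # assumption: 1 = highest risk, 4 = lowest
    owner: str = ""             # a named person or role, not a department
    rules_of_use: bool = False  # written rules of use exist
    monitoring: bool = False    # monitoring is in place
    evidence_pack: bool = False # evidence pack is ready to show


def control_gaps(entry: RegisterEntry) -> list[str]:
    """List the controls a Tier 1 or Tier 2 entry is still missing."""
    if entry.tier > 2:
        return []  # the rule of thumb only covers Tier 1 and Tier 2
    gaps = []
    if not entry.owner.strip():
        gaps.append("named owner")
    if not entry.rules_of_use:
        gaps.append("rules of use")
    if not entry.monitoring:
        gaps.append("monitoring")
    if not entry.evidence_pack:
        gaps.append("evidence pack")
    return gaps


# Hypothetical register, for illustration only.
register = [
    RegisterEntry("triage-assistant", tier=1, owner="Head of Emergency", rules_of_use=True),
    RegisterEntry("meeting-notes-summary", tier=4),
]
for entry in register:
    print(entry.name, control_gaps(entry))
```

A spreadsheet can do the same job. What matters is that every Tier 1 and Tier 2 entry has an explicit yes or no for each of the four controls.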

Plan for common failures, not rare edge cases.

These are the ones leaders should expect early:

If AI touches patient comms, documentation, or triage, these failures become clinical and reputational risk, not just “tech bugs.”

This plan is built to fit hospital reality. It focuses on Tier 1 and Tier 2 first.

Output you want by day 30: one list that is complete enough to defend.

For Tier 1 and Tier 2, assign named owners for:

Also document who holds final decision accountability when humans and AI interact.

Output you want by day 45: a one-page ownership map.

For each tool, write one page:

Output you want by day 60: rules of use for your highest-risk tools.

Output you want by day 75: one simple dashboard and a review cadence.

Output you want by day 90: a runbook, plus one tabletop drill.

For Tier 1 and Tier 2 tools, ask for:

Add contract language for:

Also confirm if the use case could be medical device software in your context, and apply higher assurance where needed.

This aligns with EHDS direction on data governance and reuse, and with Swiss work aimed at better exchange.

If someone asks, “prove control,” you should be able to show:

Want this under control in 90 days?

Let’s run an AI Governance Sprint built for hospitals, not theory.

In 2 to 3 working sessions, we will deliver:

Share the 5 AI use cases you worry about most.

I’ll translate them into a practical sprint scope, with owners, checkpoints, and a clear first set of controls.

References

1. BHM Healthcare Solutions, “AI governance, not adoption, is now a key leadership test” (2025 in Review, Part 3). [https://bhmpc.com/2026/01/2025-in-review-part-3/]

2. European Union, Artificial Intelligence Act, Regulation (EU) 2024/1689, official EUR-Lex page. [https://eur-lex.europa.eu/eli/reg/2024/1689/oj/eng]

3. European Commission, “European Health Data Space Regulation (EHDS)” timeline. Entered into force 26 March 2025. [https://health.ec.europa.eu/ehealth-digital-health-and-care/european-health-data-space-regulation-ehds_en]

4. Swiss Federal Council, “AI regulation: Federal Council to ratify Council of Europe Convention,” 12 February 2025 (news.admin.ch). [https://www.news.admin.ch/en/nsb?id=104]

5. KMU.admin.ch, “New Federal Act on Data Protection (nFADP),” applicable since 1 September 2023. [https://www.kmu.admin.ch/kmu/en/home/facts-and-trends/digitization/data-protection/new-federal-act-on-data-protection-nfadp.html]

6. Swiss Federal Office of Public Health (FOPH), “Medical devices legislation,” revised in line with EU MDR and IVDR. [https://www.bag.admin.ch/en/medical-devices-legislation]

7. digital.swiss, DigiSanté programme description, standards and infrastructure for data exchange and a Swiss health data space direction. [https://digital.swiss/en/action-plan/measures/design-of-programme-to-promote-digital-transformation-in-the-healthcare-sector]

Anything that generates, predicts, recommends, or triggers automated next steps inside hospital workflows.

That includes:

Rule of thumb: if a feature can influence care, access, documentation, or patient-facing content, put it in the AI register and govern it.

Yes. Many hospitals run AI because vendors ship it inside tools they already pay for, often as auto-summaries, prompts, coding helpers, or workflow automation.

Governance is not about what you purchased. It is about what influences decisions and outputs in your environment.

If leaders can’t say where AI runs, what it uses, and what happens when it’s wrong, risk grows fast.

Use the tier model in the post and start with the top two tiers:

Then do four things first: name owners, write Rules of Use, set monitoring, and prepare the evidence pack for Tier 1–2 tools.

A simple “proof of control” pack that you can pull up in one meeting:

If you can show these, you can answer most credibility questions quickly.

Don’t build a new bureaucracy. Embed AI control into existing routines.

Practical moves that work in real hospital life:

This keeps innovation moving while keeping risk visible and managed.

EU standards can matter in practice when you:

Even when not strictly required, many Swiss providers adopt EU-grade governance because vendors, partners, and patient flows pull them there.

AI governance needs shared ownership, but accountability must be named for Tier 1–2 tools.

A workable split:

Rule of thumb: if you can’t name the owner in 10 seconds, you don’t have governance yet.


Patient experience starts before the bedside. Fix staff-to-staff handovers with two simple habits that cut friction, boost clarity, and build trust fast.

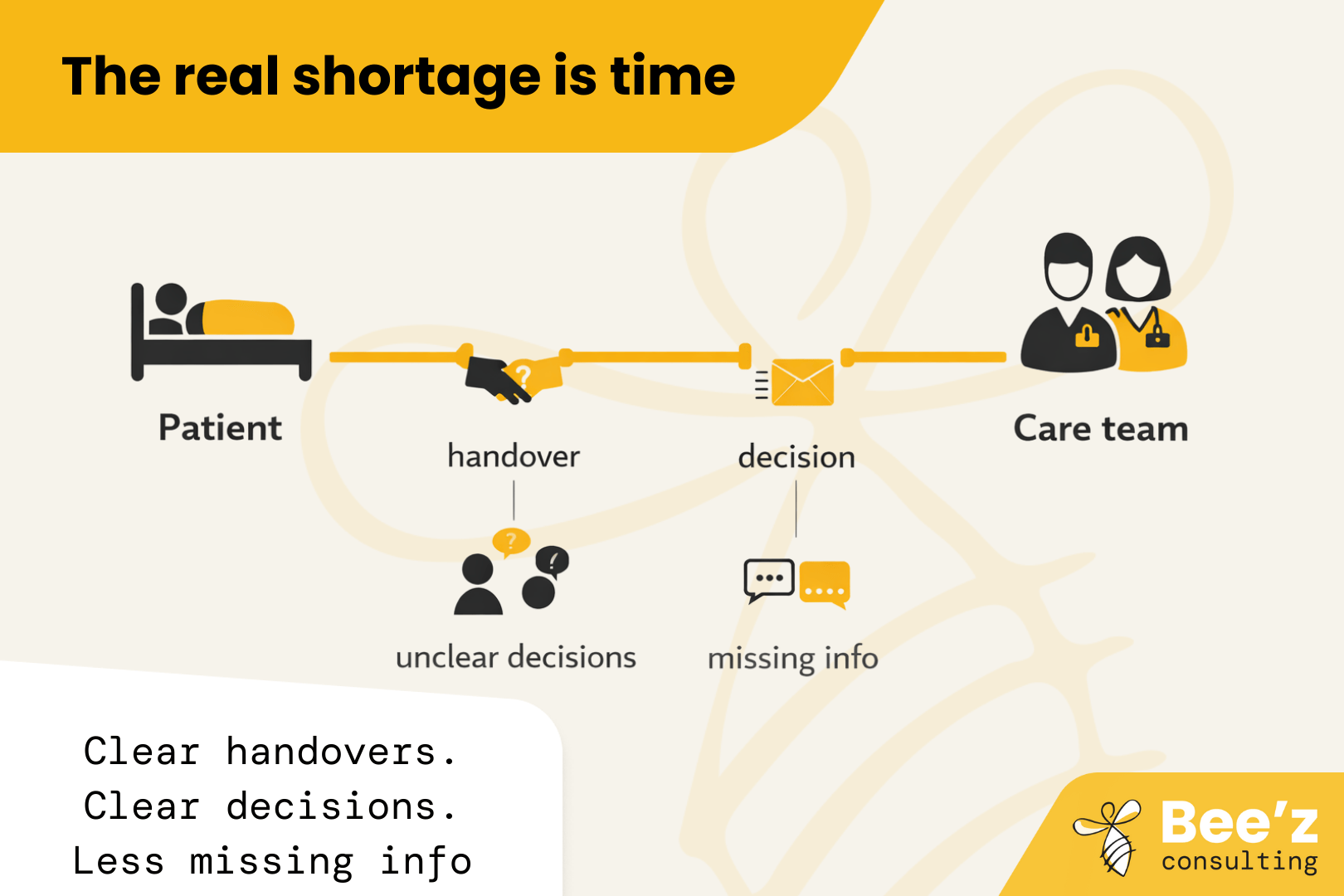

The real shortage is time with patients. “Thrive” is the missing lever. Fix daily workflow friction so hiring and retention finally pay off.